For those who are new to the feature, here's the deal: every week, I break down a topic related to regression to the mean. Some weeks, I'll explain what it is, how it works, why you hear so much about it, and how you can harness its power for yourself. In other weeks, I'll give practical examples of regression at work.

In weeks where I'm giving practical examples, I will select a metric to focus on. I'll rank all players in the league according to that metric and separate the top players into Group A and the bottom players into Group B. I will verify that the players in Group A have outscored the players in Group B to that point in the season. And then I will predict that, by the magic of regression, Group B will outscore Group A going forward.

Crucially, I don't get to pick my samples (other than choosing which metric to focus on). If I'm looking at receivers and Justin Jefferson is one of the top performers in my sample, then Justin Jefferson goes into Group A, and may the fantasy gods show mercy on my predictions.
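For readers who want to try the selection step at home, here's a minimal Python sketch of the process described above: rank everyone by one metric, put the top of the list in Group A and the bottom in Group B, and confirm Group A has been outproducing Group B before making any call. It is only a sketch of the procedure and does not reproduce any particular spreadsheet. The field names (`ypc`, `ypg`), the group size, and the five sample players are hypothetical placeholders with made-up numbers.

```python
from statistics import mean

def split_groups(players, metric, group_size, high_is_top=True):
    """Rank players by `metric` and return (group_a, group_b).

    Group A holds the `group_size` top-ranked players and Group B the
    `group_size` bottom-ranked ones. Membership depends only on the
    ranking; nobody is hand-picked. Set high_is_top=False for stats where
    a LOW value marks the players who have been outscoring everyone
    (e.g. yard-to-TD ratio).
    """
    if 2 * group_size > len(players):
        raise ValueError("groups would overlap")
    ranked = sorted(players, key=lambda p: p[metric], reverse=high_is_top)
    return ranked[:group_size], ranked[-group_size:]

def pct_more(a, b):
    """Percentage by which `a` exceeds `b` (negative if `a` is smaller)."""
    return (a / b - 1) * 100

def avg(group, stat):
    """Average of `stat` across a group of players."""
    return mean(p[stat] for p in group)

# Hypothetical players; names and numbers are invented for illustration.
players = [
    {"name": "RB1", "ypc": 6.1, "ypg": 80.0},
    {"name": "RB2", "ypc": 5.8, "ypg": 76.0},
    {"name": "RB3", "ypc": 4.9, "ypg": 70.0},
    {"name": "RB4", "ypc": 3.9, "ypg": 64.0},
    {"name": "RB5", "ypc": 3.6, "ypg": 56.0},
]

group_a, group_b = split_groups(players, "ypc", group_size=2)
a_ypg, b_ypg = avg(group_a, "ypg"), avg(group_b, "ypg")

# Sanity check before predicting: Group A must have outproduced Group B so far.
if a_ypg <= b_ypg:
    raise ValueError("Group A hasn't outproduced Group B; no regression call to make")
print(f"Group A averaged {pct_more(a_ypg, b_ypg):.0f}% more yards per game")
```

Because the groups come straight out of the sort, a star lands wherever the numbers put him, which is the whole point of not picking samples by hand.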

And then because predictions are meaningless without accountability, I track and report my results. Here's last year's season-ending recap, which covered the outcome of every prediction made in our seven-year history, giving our top-line record (41-13, a 76% hit rate) and lessons learned along the way.

Our Year to Date

Sometimes, I use this column to explain the concept of regression to the mean. In Week 2, I discussed what it is and what this column's primary goals would be. In Week 3, I explained how we could use regression to predict changes in future performance-- who would improve, who would decline-- without knowing anything about the players themselves. In Week 7, I explained why large samples are our biggest asset when attempting to benefit from regression.

Sometimes, I point out broad trends. In Week 5, I shared twelve years' worth of data demonstrating that preseason ADP held as much predictive power as performance to date through the first four weeks of the season.

Other times, I use this column to make specific predictions. In Week 4, I explained that touchdowns tend to follow yards and predicted that the players with the highest yard-to-touchdown ratios would begin outscoring the players with the lowest. In Week 6, I explained that yards per carry was a step away from a random number generator and predicted the players with the lowest averages would outrush those with the highest going forward. In Week 8, I broke down how teams with unusual home/road splits usually performed going forward and predicted the Cowboys would be better at home than on the road for the rest of the season.

The Scorecard

| Statistic Being Tracked | Performance Before Prediction | Performance Since Prediction | Weeks Remaining |
|---|---|---|---|
| Yard-to-TD Ratio | Group A averaged 17% more PPG | Group B averages 10% more PPG | None (Win!) |
| Yards per carry | Group A averaged 22% more yards per game | Group B averages 41% more yards per game | 1 |
| Cowboys Point Differential | Cowboys were 90 points better on the road than at home | Cowboys are 6 points better at home than on the road* | 9 |

Our "low volume, high efficiency" Group A has seen its rush attempts decline every week so far (though all three weeks are higher than its pre-prediction baseline). On the other hand, our "high volume, low efficiency" Group B has seen its rush attempts increase every week (again, all three weeks are above the pre-prediction baseline). As a result, Group A has been more and more reliant on maintaining a high ypc to keep pace.

This is bad news for Group A because, after another big week, Group B has once again passed Group A in yards per carry: our "inefficient" backs are averaging 5.15 ypc since the prediction, while our "efficient" backs are at 4.83.
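The squeeze on Group A comes down to simple arithmetic: rushing yards per game are carries per game times yards per carry. Here's a minimal sketch of the break-even calculation using the 5.15 ypc figure for Group B from above. The carries-per-game inputs are hypothetical placeholders, not either group's actual volume, and the function names are illustrative.

```python
def yards_per_game(carries_per_game: float, ypc: float) -> float:
    """Rushing yards per game = volume (carries) x efficiency (ypc)."""
    return carries_per_game * ypc

def breakeven_ypc(carries_a: float, carries_b: float, ypc_b: float) -> float:
    """Yards per carry Group A needs to match Group B's yards per game."""
    return yards_per_game(carries_b, ypc_b) / carries_a

# Hypothetical volumes: Group B getting more carries per game than Group A.
needed = breakeven_ypc(carries_a=12.0, carries_b=15.0, ypc_b=5.15)
print(f"Group A needs {needed:.2f} ypc to keep pace")
```

As Group A's carries fall or Group B's rise, the break-even number climbs, so the "efficient" backs need an ever-higher ypc just to stay even.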

There's a lesson in here about picking our samples, too. I always say that I don't cherry-pick names; if a player is at the top of a statistic that is traditionally unstable, I will pick that player to regress, even if he's Derrick Henry or Saquon Barkley. It's not especially surprising that those are the two best-performing backs in Group A. But it's much more surprising to see Tank Bigsby and Tyrone Tracy Jr. join them near the top, while J.K. Dobbins and Jordan Mason (who ranked 3rd and 4th in yards per game at the time of the prediction) have both fallen off substantially.

If I were picking and choosing who would regress, I probably would have bet on Dobbins and Mason sustaining their performance and Bigsby and Tracy falling off. I certainly wouldn't have bet on Najee Harris and D'Andre Swift to both average 6 yards per carry and 100 yards per game for Group B. (Harris has topped 100 yards every week since the prediction, tying Joe Mixon for the longest such streak of the season.) This is, of course, precisely why I don't pick and choose who will regress.

Our Cowboys prediction was that they would play worse on the road than at home (as measured by point differential). It gets an asterisk for now because they haven't played a home game since the prediction (and they won't play one this week, either). Still, they did suffer their first road loss of the season last week, so that's a start.

Predicting Regression In An Unfamiliar World

I wrote at the beginning of the year that this column has four main goals:

- to persuade you that regression is real and reliable,

- to provide actionable examples to leverage in your fantasy league,

- to educate you on how and why regression is working, and

- to equip you with the tools to find usable examples on your own.

Having (hopefully) made some headway on the first three goals, I want to focus on the fourth.

It's hard enough as a football fan to know whether a "traditional" stat is meaningful or not. ("Meaningful", in this case, means "likely to remain stable going forward".) The research on yards per carry has been clear for decades, and I still get pushback when I bring it up.

But we live in a world where new statistics are introduced seemingly every day. There was a time when receiving yards per game was the cutting edge for measuring receiver play. If you really wanted to dig deep, perhaps you'd look into yards per target.

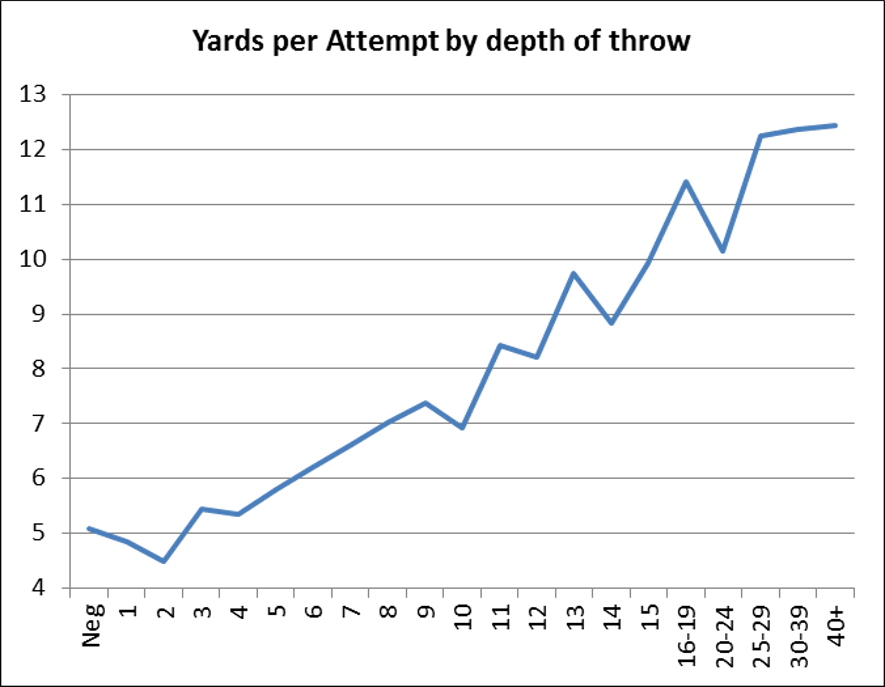

(As a brief aside, yards per target is not a very good efficiency stat. It strongly favors deep threats-- roughly 50% of the variation in yards per target is explained by a player's yards per reception average. Here is an article from Chase Stuart of Football Perspective illustrating how yards per attempt for quarterbacks-- and by extension, yards per target for receivers-- is largely a function of the depth of the throw.)

After yards per target, people turned to yards per team pass attempt and yards per route run to evaluate receivers. (These are both much better measures of efficiency.) And the options have only further multiplied from there-- fans today who want to know how good a receiver is at getting open can consult NextGenStats' Average Separation, ESPN's Open Score, FantasyPoints' Average Separation Score, and Matt Harmon's Reception Perception, just to name four different statistics that purport to measure the same thing but return wildly divergent results.
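The first two of those measures are simple enough to compute yourself, and putting them side by side shows that the real difference from yards per target is the denominator. Here's a sketch using one hypothetical receiver's season totals; the class and field names are illustrative placeholders, not any data provider's schema. The separation-based metrics are proprietary and aren't sketched here.

```python
from dataclasses import dataclass

@dataclass
class ReceiverSeason:
    """Season totals for one receiver (field names are illustrative placeholders)."""
    receiving_yards: float
    receptions: int
    targets: int
    routes_run: int
    team_pass_attempts: int

    def yards_per_reception(self) -> float:
        return self.receiving_yards / self.receptions

    def catch_rate(self) -> float:
        return self.receptions / self.targets

    def yards_per_target(self) -> float:
        # Algebraically equal to catch_rate() * yards_per_reception(), which is
        # why receivers with a high yards-per-reception average tend to post a
        # high yards per target.
        return self.receiving_yards / self.targets

    def yards_per_team_pass_attempt(self) -> float:
        # Denominator is every team pass attempt, so earning targets matters too.
        return self.receiving_yards / self.team_pass_attempts

    def yards_per_route_run(self) -> float:
        # Denominator is routes the receiver actually ran.
        return self.receiving_yards / self.routes_run

# Hypothetical receiver; numbers invented for illustration.
wr = ReceiverSeason(receiving_yards=1000, receptions=80, targets=120,
                    routes_run=500, team_pass_attempts=600)
for name in ("yards_per_target", "yards_per_team_pass_attempt", "yards_per_route_run"):
    print(f"{name}: {getattr(wr, name)():.2f}")
```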

In a world where we're inundated with ever-more-complicated statistics all claiming to be the newest and best measure of play-- and especially when those stats frequently find themselves in strong disagreement with each other-- how can anyone be an informed consumer?

I don't have a perfect answer to this. But I do have a quick trick that I use to help sort the wheat from the chaff.